System Integrations

- Cyber Incident Monitoring Integration Procedure

- Microsoft 365

- GitHub

- Add Windows Integrations

- Sysmon for Linux

- 1 Password Integrations

- Atlassian Bitbucket Integrations

- AWS Cloudtrails Integrations

- AWS GuardDuty Integrations

- AWS Security Hub Integrations

- AWS Integrations

- CISCO Meraki Integrations

- CISCO Secure Endpoint Integrations

- CISCO Umbrella Integrations

- Cloudflare Integration

- Crowdstrike Integrations

- Dropbox Integrations

- F5 Integrations

- Fortinet-Fortigate Integrations

- GCP Integrations

- GitLab Integrations

- Google Workspace Integrations

- Jumpcloud Integrations

- Mimecast Integrations

- MongoDB Integrations

- OKTA Integrations

- Pulse Connect Secure Integrations

- Slack Integrations

- System Integrations

- Team Viewer Integrations

- Z Scaler Integrations

- gcp

- VMware vSphere Integration

- SentinelOne Integrations

- Custom Windows Event Logs - Integration

- Windows Event Forwarding to Linux server using Nxlog

- Windows Event Forwarding to Linux server using Powershell script

- Sophos Integration

- Atlassian Bitbucket Integrations (New)

- Palo Alto Cortex XDR Integration

- Active Directory Integrations

- Microsoft SQL Server Integration

- Azure Logs Integration

- ESET Protect Integration

- ESET Threat Intelligence Integrations

- CSPM for Azure Integration

- Resource Manager Endpoint Integration

- CISCO Secure Email Gateway Integrations

- CISCO Nexus Integrations

- BitDefender Integrations

- Bitwarden Integrations

- Forwarding logs from rsyslog client to a remote rsyslogs server

- New script for logs forwarding

Cyber Incident Monitoring Integration Procedure

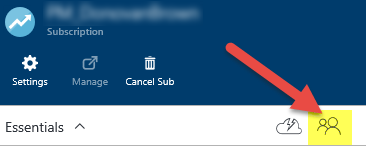

Go to > Cyber Incident Monitoring

Microsoft 365

Microsoft Office 365 integration currently supports user, admin, system, and policy actions and events from Office 365 and Azure AD activity logs exposed by the Office 365 Management Activity API.

Procedures

To perform the setup, please confirm that you have the following access:

- A Microsoft Office 365 account with Administrative Privileges

- A Microsoft Azure account with Administrative Privileges

Register a new Office 365 web application

To get started collecting Office 365 logs, register an Office 365 web application:

- Log into the Office 365 portal as an Active Directory tenant administrator.

- Click Show all to expand the left navigation area, and then click Azure Active Directory.

- Select App Registrations, and then click + New application registration.

- Provide the following information in the fields:

  - Name – for example, o365cytech.

  - Select Single tenant for supported account types.

  - Leave the Redirect URI blank.

- The Audit Log Search needs to be enabled.

- Click Register and note the Application (client) ID.

Set up Active Directory security permissions

The Active Directory security permissions allow the application you created to read threat intelligence data and activity reports for your organization.

To set up Active Directory permissions:

- On the main panel under the new application, click API Permissions, and then click + Add a permission.

- Locate and click on Office 365 Management APIs.

- In Application permissions, expand and select ActivityFeed.Read, ActivityFeed.ReadDlp, ActivityReports.Read, and ServiceHealth.Read

- Ensure all necessary permissions are selected, and then click Add permissions.

- Click Grant admin consent, and then click Accept to confirm.

- On the left navigation area, select Certificates & secrets, and then click + New client secret.

- Type a key Description and set the duration to Never or the maximum grant time.

- Click Add.

- Copy the Value of the new client secret (API key) immediately; it is hidden once you leave the page.

- Click Overview to return to the application summary, and then click the link under Managed application in local directory.

- Click Properties, and then note the Object ID associated with the application.
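As a rough illustration of how the values collected above are used, the sketch below builds (but does not send) an OAuth 2.0 client-credentials token request for the Office 365 Management Activity API. The tenant ID parameter and the function name are assumptions for illustration; the procedure above only records the Application (client) ID, the client secret, and the Object ID.

```python
from urllib.parse import urlencode
from urllib.request import Request


def build_token_request(tenant_id: str, client_id: str, client_secret: str) -> Request:
    """Build a client-credentials token request for the Management Activity API.

    tenant_id is an assumed extra input (the directory/tenant ID of the
    Azure AD tenant); it is not one of the values noted in the procedure.
    """
    url = f"https://login.microsoftonline.com/{tenant_id}/oauth2/v2.0/token"
    body = urlencode(
        {
            "grant_type": "client_credentials",
            "client_id": client_id,
            "client_secret": client_secret,
            # Resource scope for the Office 365 Management APIs.
            "scope": "https://manage.office.com/.default",
        }
    ).encode("ascii")
    return Request(
        url,
        data=body,
        method="POST",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


# Example with placeholder values; nothing is sent over the network here.
request = build_token_request("example-tenant", "example-client-id", "example-secret")
print(request.full_url)
```

Passing the request to urllib.request.urlopen would perform the actual call; that step is left out so the sketch can be run without credentials.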

Steps to Renew the Client Secret (API Key):

1. Log into the Azure Portal:

- Go to the Azure Portal and log in using an account with administrative privileges.

2. Navigate to Azure Active Directory:

- In the left navigation pane, select Azure Active Directory.

- If it's not visible, click Show all to expand the list and find it.

3. Go to App Registrations:

- Under Azure Active Directory, select App Registrations.

- Find your registered application (e.g., "o365cytech") in the list, or use the search bar to locate it.

4. Open Certificates & Secrets:

- Click on the registered app to open its details page.

- In the left-hand menu, select Certificates & Secrets.

5. Generate a New Client Secret:

- Under Client Secrets, you'll see a list of previously created secrets, along with their expiration dates.

- Click + New client secret to create a new one.

6. Configure the New Secret:

- Enter a description for the new key (e.g., "Renewed Key for o365cytech").

- Set the duration for the new client secret:

7. Save and Copy the New Secret:

- Click Add.

- Once the new secret is generated, copy the value immediately. This is your new client secret (API key). The secret value will be hidden after you leave this page, so make sure to store it securely.

8. Update Any Services Using the Key:

- If any services or scripts are using the previous client secret, you'll need to update them with the new one.

9. Remove the Old Secret (Optional):

- If the old client secret is no longer needed, you can delete it to avoid confusion. Simply click the trash icon next to the old key under Client Secrets.

GitHub

Introduction

The GitHub integration collects events from the GitHub API.

Logs

Audit

The GitHub audit log records all events related to the GitHub organization.

To use this integration, you must be an organization owner, and you must use a Personal Access Token with the admin:org scope.

This integration is not compatible with GitHub Enterprise server.

Code Scanning

Code Scanning lets you retrieve all security vulnerabilities and coding errors from a repository set up with the GitHub Advanced Security code scanning feature.

To use this integration, GitHub Apps must have the security_events read permission. Alternatively, use a personal access token with the security_events scope for private repositories or the public_repo scope for public repositories.

Secret Scanning

Secret Scanning lets you retrieve secret scanning alerts from a repository set up with the GitHub Advanced Security secret scanning feature.

To use this integration, GitHub Apps must have the secret_scanning_alerts read permission. Alternatively, you must be an administrator for the repository or for the organization that owns the repository, and you must use a personal access token with the repo scope or security_events scope. For public repositories, you may instead use the public_repo scope.

Dependabot

Dependabot lets you retrieve known vulnerabilities in dependencies from a repository set up with the GitHub Advanced Security Dependabot feature.

To use this integration, you must be an administrator for the repository or for the organization that owns the repository, and you must use a personal access token with the repo scope or security_events scope. For public repositories, you may instead use the public_repo scope.

Issues

The GitHub Issues data stream lets you retrieve GitHub issues, including pull requests, issue assignees, comments, labels, and milestones. See About Issues for more details. You can retrieve issues for a specific repository or for an entire organization. Because the GitHub API treats pull requests as issues, you can use the github.issues.is_pr field to filter for pull requests only.

All issues, including closed ones, are retrieved by default. To retrieve only open issues, change the State parameter to open.

To use this integration, you must use a GitHub App or a Personal Access Token with read permission to the repositories or organization. Please refer to GitHub Apps Permissions Required and Personal Access Token Permissions Required for more details.
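To make the State parameter and the pull-request distinction concrete, here is a minimal sketch against the public GitHub REST API. The function names are hypothetical, and the default of "all" mirrors the integration behaviour described above rather than the API's own default. In raw API responses a pull request is an issue object that carries a pull_request key, which is presumably what the integration maps to github.issues.is_pr.

```python
from urllib.parse import urlencode


def issues_url(owner: str, repo: str, state: str = "all") -> str:
    """Return the REST URL that lists issues for one repository."""
    if state not in {"open", "closed", "all"}:
        raise ValueError(f"unsupported state: {state!r}")
    query = urlencode({"state": state})
    return f"https://api.github.com/repos/{owner}/{repo}/issues?{query}"


def only_pull_requests(issues: list) -> list:
    """Keep only the issue objects that are really pull requests."""
    return [issue for issue in issues if "pull_request" in issue]


# Placeholder data shaped like a (heavily trimmed) API response.
sample = [{"number": 1}, {"number": 2, "pull_request": {}}]
print(issues_url("example-org", "example-repo", state="open"))
print([issue["number"] for issue in only_pull_requests(sample)])
```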

GitHub Integration Procedures

Please provide the following information to CyTech:

1. Select Settings

2. Select Developer Settings

3. Select Tokens (classic)

4. Select the admin:org scope

Collect GitHub logs via API

- Personal Access Token - The GitHub Personal Access Token. Requires the 'admin:org' scope.

- Organization Name - The GitHub organization name/ID.
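The two values above are enough to query the organization audit log. The sketch below only constructs the request so the shape of the call is visible; the function name is hypothetical, and whether the endpoint is available depends on the organization's GitHub plan.

```python
from urllib.request import Request


def build_audit_log_request(organization: str, token: str) -> Request:
    """Build (but do not send) a request for an organization's audit log."""
    return Request(
        f"https://api.github.com/orgs/{organization}/audit-log",
        headers={
            # The token needs the admin:org scope, as noted above.
            "Authorization": f"Bearer {token}",
            "Accept": "application/vnd.github+json",
        },
    )


request = build_audit_log_request("example-org", "example-token")
print(request.get_method(), request.full_url)
```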

GHAS Code Scanning

- Personal Access Token - The GitHub Personal Access Token. Requires the 'public_repo' scope for public repositories and the 'security_events' scope for private repositories. See List code scanning alerts for a repository.

- Repository owner - The owner of the GitHub repository. If the repository belongs to an organization, the owner is the name of the organization.

GHAS Dependabot

- Personal Access Token - The GitHub Personal Access Token. See Authenticating with GraphQL.

- Repository owner - The owner of the GitHub repository.

Github Issues

1. Personal Access Token - The GitHub Personal Access Token.

2. Repository owner - The owner of the GitHub repository. If the repository belongs to an organization, the owner is the name of the organization.

GHAS Secret Scanning

1. Personal Access Token - The GitHub Personal Access Token. Requires admin access to the repository or to the organization owning the repository, along with a personal access token with the 'public_repo' scope for public repositories and the repo or security_events scope for private repositories. See List secret scanning alerts for a repository.

2. Repository owner - The owner of the GitHub repository.

Add Windows Integrations

Introduction

The Windows integration allows you to monitor the Windows OS, services, applications, and more.

Use the Windows integration to collect metrics and logs from your machine. Then visualize that data in Kibana, create alerts to notify you if something goes wrong, and reference data when troubleshooting an issue.

For example, if you wanted to know if a Windows service unexpectedly stops running, you could install the Windows integration to send service metrics to Elastic. Then, you could view real-time changes to service status in Kibana's [Metrics Windows] Services dashboard.

Data streams

The Windows integration collects two types of data: logs and metrics.

Logs help you keep a record of events that happen on your machine. Log data streams collected by the Windows integration include forwarded events, PowerShell events, and Sysmon events. Log collection for the Security, Application, and System event logs is handled by the System integration. See more details in the Logs reference.

- https://aquila-elk.kb.us-east-1.aws.found.io:9243/app/integrations/detail/windows-1.15.2/overview#logs-reference

Metrics give you insight into the state of the machine. Metric data streams collected by the Windows integration include service details and performance counter values. See more details in the Metrics reference.

Note: For 7.11, security, application and system logs have been moved to the system package.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

You need Elasticsearch for storing and searching your data and Kibana for visualizing and managing it. You can use our hosted Elasticsearch Service on Elastic Cloud, which is recommended, or self-manage the Elastic Stack on your own hardware.

Each data stream collects different kinds of metric data, which may require dedicated permissions to be fetched and which may vary across operating systems.

Setup

For step-by-step instructions on how to set up an integration, see the Getting started guide.

Note: Because the Windows integration always applies to the local server, the hosts config option is not needed.

Ingesting Windows Events via Splunk

This integration allows you to seamlessly ingest data from a Splunk Enterprise instance. The integration uses the httpjson input in Elastic Agent to run a Splunk search via the Splunk REST API and then extract the raw event from the results. The raw event is then processed via the Elastic Agent. You can customize both the Splunk search query and the interval between searches. For more information see Ingest data from Splunk.

Note: This integration requires Windows Events from Splunk to be in XML format. To achieve this, renderXml needs to be set to 1 in your inputs.conf file.

Logs reference

Forwarded

The Windows forwarded data stream provides events from the Windows ForwardedEvents event log. The fields will be the same as the channel specific data streams.

Powershell

The Windows powershell data stream provides events from the "Windows PowerShell" event log.

System Integration Procedures

Collect events from the following Windows event log channels: (Enable Yes/No)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original XML event, added to the field event.original.

- Event ID - A list of included and excluded (blocked) event IDs. The value is a comma-separated list. The accepted values are single event IDs to include (e.g. 4624), a range of event IDs to include (e.g. 4700-4800), and single event IDs to exclude (e.g. -4735). Limit 22 IDs.

- Ignore events older than - If this option is specified, events that are older than the specified amount of time are ignored. Valid time units are "ns", "us" (or "µs"), "ms", "s", "m", "h".

- Language ID - The language ID the events will be rendered in. The language will be forced regardless of the system language. A complete list of language IDs can be found at https://docs.microsoft.com/en-us/openspecs/windows_protocols/ms-lcid/a9eac961-e77d-41a6-90a5-ce1a8b0cdb9c. It defaults to 0, which means the system language is used. Example: 0x0409 for en-US.

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)
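The Event ID value described above is easier to get right when checked before it is submitted. The following sketch parses the comma-separated format (single IDs, ranges, and exclusions prefixed with a minus sign). The function names are hypothetical, and the precedence rules (exclusions win; an exclusion-only list lets every other ID through) are assumptions about how the filter behaves, not something stated in this procedure.

```python
def parse_event_ids(spec: str):
    """Split an Event ID value into included IDs, included ranges, and excluded IDs."""
    include_ids, include_ranges, exclude_ids = set(), [], set()
    for raw in spec.split(","):
        item = raw.strip()
        if not item:
            continue
        if item.startswith("-"):
            # Leading minus: a single ID to exclude, e.g. -4735.
            exclude_ids.add(int(item[1:]))
        elif "-" in item:
            # Inner minus: an inclusive range, e.g. 4700-4800.
            low, high = (int(part) for part in item.split("-", 1))
            include_ranges.append((low, high))
        else:
            include_ids.add(int(item))
    return include_ids, include_ranges, exclude_ids


def is_collected(event_id: int, spec: str) -> bool:
    """Assumed semantics: exclusions win; with no inclusions, all other IDs pass."""
    include_ids, include_ranges, exclude_ids = parse_event_ids(spec)
    if event_id in exclude_ids:
        return False
    if not include_ids and not include_ranges:
        return True
    return event_id in include_ids or any(
        low <= event_id <= high for low, high in include_ranges
    )


spec = "4624, 4700-4800, -4735"
for candidate in (4624, 4750, 4735, 5000):
    print(candidate, is_collected(candidate, spec))
```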

Powershell (Enable Yes/No)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original XML event, added to the field event.original.

- Event ID - A list of included and excluded (blocked) event IDs. The value is a comma-separated list. The accepted values are single event IDs to include (e.g. 4624), a range of event IDs to include (e.g. 4700-4800), and single event IDs to exclude (e.g. -4735). Limit 22 IDs.

- Ignore events older than - If this option is specified, events that are older than the specified amount of time are ignored. Valid time units are "ns", "us" (or "µs"), "ms", "s", "m", "h".

- Language ID - The language ID the events will be rendered in. The language will be forced regardless of the system language. A complete list of language IDs can be found at https://docs.microsoft.com/en-us/openspecs/windows_protocols/ms-lcid/a9eac961-e77d-41a6-90a5-ce1a8b0cdb9c. It defaults to 0, which means the system language is used. Example: 0x0409 for en-US.

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Powershell Operational (Enable Yes/No)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original XML event, added to the field event.original.

- Event ID - A list of included and excluded (blocked) event IDs. The value is a comma-separated list. The accepted values are single event IDs to include (e.g. 4624), a range of event IDs to include (e.g. 4700-4800), and single event IDs to exclude (e.g. -4735). Limit 22 IDs.

- Ignore events older than - If this option is specified, events that are older than the specified amount of time are ignored. Valid time units are "ns", "us" (or "µs"), "ms", "s", "m", "h".

- Language ID - The language ID the events will be rendered in. The language will be forced regardless of the system language. A complete list of language IDs can be found at https://docs.microsoft.com/en-us/openspecs/windows_protocols/ms-lcid/a9eac961-e77d-41a6-90a5-ce1a8b0cdb9c. It defaults to 0, which means the system language is used. Example: 0x0409 for en-US.

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Sysmon Operational (Enable Yes/No)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original XML event, added to the field event.original.

- Event ID - A list of included and excluded (blocked) event IDs. The value is a comma-separated list. The accepted values are single event IDs to include (e.g. 4624), a range of event IDs to include (e.g. 4700-4800), and single event IDs to exclude (e.g. -4735). Limit 22 IDs.

- Ignore events older than - If this option is specified, events that are older than the specified amount of time are ignored. Valid time units are "ns", "us" (or "µs"), "ms", "s", "m", "h".

- Language ID - The language ID the events will be rendered in. The language will be forced regardless of the system language. A complete list of language IDs can be found at https://docs.microsoft.com/en-us/openspecs/windows_protocols/ms-lcid/a9eac961-e77d-41a6-90a5-ce1a8b0cdb9c. It defaults to 0, which means the system language is used. Example: 0x0409 for en-US.

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Collect Windows perfmon and service metrics (Enable Yes/No)

- Perfmon Group Measurements By Instance (Enable Yes/No) - Enabling this option sends all measurements with a matching perfmon instance as part of a single event.

- Perfmon Ignore Non Existent Counters (Enable Yes/No) - Enabling this option ignores any errors caused by counters that do not exist.

- Perfmon Queries - Lists the perfmon queries to execute. Each query has an object option, an optional instance configuration, and the actual counters.

- Period

- Synthetic source (Enable Yes/No)

Windows service metrics (Enable Yes/No)

- Period

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Collect logs from third-party REST API (experimental) (Enable Yes/No)

- URL of Splunk Enterprise Server - i.e. scheme://host:port, path is automatic

- Splunk REST API Username

- Splunk Authorization Token - Bearer Token or Session Key, e.g. "Bearer eyJFd3e46..." or "Splunk 192fd3e...". Cannot be used with username and password.

- SSL Configuration - i.e. certificate_authorities, supported_protocols, verification_mode etc.
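Because the authorization token cannot be combined with a username and password, it can help to validate the chosen credentials before filling in the form. The sketch below is a hypothetical pre-check, not the agent's own logic; the function name and the error messages are assumptions.

```python
import base64


def splunk_auth_header(username=None, password=None, token=None) -> dict:
    """Return the Authorization header for exactly one credential style."""
    if token and (username or password):
        raise ValueError("use either a token or a username/password, not both")
    if token:
        # The token field already carries its scheme, e.g. "Bearer ..." or "Splunk ...".
        if not token.startswith(("Bearer ", "Splunk ")):
            raise ValueError("token must start with 'Bearer ' or 'Splunk '")
        return {"Authorization": token}
    if username and password:
        encoded = base64.b64encode(f"{username}:{password}".encode("utf-8"))
        return {"Authorization": "Basic " + encoded.decode("ascii")}
    raise ValueError("no credentials supplied")


print(splunk_auth_header(token="Bearer example-token"))
print(splunk_auth_header(username="example-user", password="example-pass"))
```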

Windows ForwardedEvents via Splunk Enterprise REST API (Enable Yes/No)

- Interval to query Splunk Enterprise REST API - Go Duration syntax (e.g. 10s)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original event, added to the field event.original.

- Splunk search string

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Windows Powershell Events via Splunk Enterprise REST API (Enable Yes/No)

- Interval to query Splunk Enterprise REST API - Go Duration syntax (e.g. 10s)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original event, added to the field event.original.

- Splunk search string

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Windows Powershell Operational Events via Splunk Enterprise REST API (Enable Yes/No)

- Interval to query Splunk Enterprise REST API - Go Duration syntax (e.g. 10s)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original event, added to the field event.original.

- Splunk search string

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Windows Sysmon Operational Events via Splunk Enterprise REST API (Enable Yes/No)

- Interval to query Splunk Enterprise REST API - Go Duration syntax (e.g. 10s)

- Preserve original event (Enable Yes/No) - Preserves a raw copy of the original event, added to the field event.original.

- Splunk search string

- Tags

- Processors - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed. See Processors for details.

- Synthetic source (Enable Yes/No)

Sysmon for Linux

Introduction

The Sysmon for Linux integration allows you to monitor Sysmon for Linux, an open-source system monitoring tool developed to collect security events from Linux environments.

Use the Sysmon for Linux integration to collect logs from a Linux machine that has the Sysmon tool running. Then visualize that data in Kibana, create alerts to notify you if something goes wrong, and reference data when troubleshooting an issue.

NOTE: To collect Sysmon events from the Windows event log, use the Windows sysmon_operational data stream instead.

- Sysmon for Linux - https://github.com/Sysinternals/SysmonForLinux

- Windows sysmon_operational data stream - https://docs.elastic.co/en/integrations/windows#sysmonoperational

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

Setup

For step-by-step instructions on how to set up an integration, see the Getting started guide.

Data streams

The Sysmon for Linux log data stream provides events from logs produced by the Sysmon tool running on a Linux machine.

Sysmon for Linux Integration

Please provide the following information to CyTech:

Collect Sysmon for Linux logs (Enable Yes/No)

- Paths - /var/log/sysmon*

1 Password Integrations

Introduction

With 1Password Business, you can send your account activity to your security information and event management (SIEM) system, using the 1Password Events API.

Get reports about 1Password activity, such as sign-in attempts and item usage, while you manage all your company’s applications and services from a central location.

With 1Password Events Reporting and Elastic SIEM, you can:

- Control your 1Password data retention

- Build custom graphs and dashboards

- Set up custom alerts that trigger specific actions

- Cross-reference 1Password events with the data from other services

Events

Sign-in Attempts

Use the 1Password Events API to retrieve information about sign-in attempts. Events include the name and IP address of the user who attempted to sign in to the account, when the attempt was made, and – for failed attempts – the cause of the failure.

Item Usages

This uses the 1Password Events API to retrieve information about items in shared vaults that have been modified, accessed, or used. Events include the name and IP address of the user who accessed the item, when it was accessed, and the vault where the item is stored.

Requirements

You can set up Events Reporting if you’re an owner or administrator.

1Password Integration Procedures

Please provide the following information to CyTech:

The 1Password Events API Beat returns information from 1Password through requests to the Events REST API and sends that data securely to Elasticsearch. Requests are authenticated with a bearer token. Issue a token for each application or service you use.

To connect your 1Password account to Elastic:

- Download and install the 1Password Events API Elastic Beat from the 1Password GitHub repository.

- Download an example eventsapibeat.yml file.

- Configure the YAML file for the Beat to include:

  - The bearer token you saved previously in the auth_token fields for each 1Password event type you plan to monitor.

  - The output for events (sent directly to Elasticsearch, or through Logstash).

  - Any other configurations you want to customize.

- Run the following command: ./eventsapibeat -c eventsapibeat.yml -e

You can now use Elasticsearch with the 1Password Events API Beat to monitor events from your 1Password account. The returned data will follow the Elastic Common Schema (ECS) specifications.

Collect events from 1Password Events API

- URL of 1Password Events API Server - Options: https://events.1password.com, https://events.1password.ca, https://events.1password.eu, https://events.ent.1password.com. The path is automatic.

- 1Password Authorization Token - Bearer Token, e.g. "eyJhbGciO..."
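To show how the server URL and bearer token fit together, the sketch below builds (without sending) a request for sign-in attempts. The /api/v1/signinattempts path and the limit field are assumptions based on the public 1Password Events API and should be verified against its reference; the integration itself adds the path automatically, and the function name is hypothetical.

```python
import json
from urllib.request import Request


def build_signin_attempts_request(base_url: str, token: str, limit: int = 20) -> Request:
    """Build a POST request that asks the Events API for sign-in attempts."""
    # Assumed endpoint path; only the base URL is entered in the integration form.
    url = base_url.rstrip("/") + "/api/v1/signinattempts"
    body = json.dumps({"limit": limit}).encode("utf-8")
    return Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Bearer {token}",
            "Content-Type": "application/json",
        },
    )


request = build_signin_attempts_request("https://events.1password.com", "example-token")
print(request.get_method(), request.full_url)
```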

Atlassian Bitbucket Integrations

Introduction

The Bitbucket integration collects audit logs from the audit log files or the audit API.

Reference: https://developer.atlassian.com/server/bitbucket/reference/rest-api/

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

For more information on auditing in Bitbucket and how it can be configured, see View and configure the audit log on Atlassian's website.

Reference: https://confluence.atlassian.com/bitbucketserver/view-and-configure-the-audit-log-776640417.html

Logs

Audit

The Bitbucket integration collects audit logs from the audit log files or the audit API of a self-hosted Bitbucket instance.

Atlassian Bitbucket Integration Procedures

Please provide the following information to CyTech:

Collect Bitbucket audit logs via log files

- Path

- Preserve Original Event? (Enable Yes/No) - Preserves a raw copy of the original event, added to the field event.original.

- Tags

- Processors (Optional) - Processors are used to reduce the number of fields in the exported event or to enhance the event with metadata. This executes in the agent before the logs are parsed.

- Indexing settings (experimental) (Enable Yes/No) - Select data streams to configure indexing options. This is an experimental feature and may have effects on other properties.

Collect Bitbucket audit logs via API (Enable Yes/No)

- API URL - The API URL without the path.

- Bitbucket Username - The Bitbucket username. Needs to be used with a Password. Do not fill if you are using a personal access token.

- Bitbucket Password - The Bitbucket password. Needs to be used with a Username. Do not fill if you are using a personal access token.

- Personal Access Token - The Personal Access Token. If set, Username and Password will be ignored.

- Initial Interval - Initial interval for the first API call. Defaults to 24 hours.

AWS Cloudtrails Integrations

Introduction

The AWS CloudTrail integration allows you to monitor AWS CloudTrail.

Reference: https://aws.amazon.com/cloudtrail/

Use the AWS CloudTrail integration to collect and parse logs related to account activity across your AWS infrastructure. Then visualize that data in Kibana, create alerts to notify you if something goes wrong, and reference logs when troubleshooting an issue.

For example, you could use the data from this integration to spot unusual activity in your AWS accounts, such as excessive failed AWS console sign-in attempts.

Data streams

The AWS CloudTrail integration collects one type of data: logs.

Logs help you keep a record of every event that CloudTrail receives. These logs are useful for many scenarios, including security and access audits. See more details in the Logs reference.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

You need Elasticsearch for storing and searching your data and Kibana for visualizing and managing it. You can use our hosted Elasticsearch Service on Elastic Cloud, which is recommended, or self-manage the Elastic Stack on your own hardware.

Before using any AWS integration you will need:

- AWS Credentials to connect with your AWS account.

- AWS Permissions to make sure the user you're using to connect has permission to share the relevant data.

For more details about these requirements, see the AWS integration documentation.

Setup

Use this integration if you only need to collect data from the AWS CloudTrail service.

If you want to collect data from two or more AWS services, consider using the AWS integration. When you configure the AWS integration, you can collect data from as many AWS services as you'd like.

For step-by-step instructions on how to set up an integration, see the Getting started guide.

Logs reference

The cloudtrail data stream collects AWS CloudTrail logs. CloudTrail monitors events like user activity and API usage in AWS services. If a user creates a trail, it delivers those events as log files to a specific Amazon S3 bucket.
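For orientation, a CloudTrail log file delivered to S3 is a gzip-compressed JSON document with a top-level Records array. The sketch below, with a hypothetical function name and a hand-made sample, picks out failed console sign-ins, the example mentioned earlier in this section. It illustrates the record shape only and is not how the integration itself parses logs.

```python
import gzip
import json


def failed_console_logins(log_bytes: bytes) -> list:
    """Return ConsoleLogin records whose outcome is Failure."""
    records = json.loads(gzip.decompress(log_bytes))["Records"]
    return [
        record
        for record in records
        if record.get("eventName") == "ConsoleLogin"
        # responseElements can be null for some events, hence the fallback.
        and (record.get("responseElements") or {}).get("ConsoleLogin") == "Failure"
    ]


# Hand-made sample standing in for a downloaded .json.gz log file.
sample = {
    "Records": [
        {"eventName": "ConsoleLogin", "responseElements": {"ConsoleLogin": "Failure"}},
        {"eventName": "ConsoleLogin", "responseElements": {"ConsoleLogin": "Success"}},
        {"eventName": "PutObject", "responseElements": None},
    ]
}
blob = gzip.compress(json.dumps(sample).encode("utf-8"))
print(len(failed_console_logins(blob)))
```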

AWS CloudTrail integration Procedures

The following must be provided in the Configure integration step when adding the AWS CloudTrail integration.

Procedures:

Please provide the following information to CyTech:

Configure Integration

- Collect CloudTrail logs from S3 (Enable)

  - Queue URL is required (URL of the AWS SQS queue that messages will be received from.)

- Collect CloudTrail logs from CloudWatch (Optional)

  - Log Group ARN (ARN of the log group to collect logs from.)

- Collect CloudTrail logs from third-party REST API (Optional)

  - URL of Splunk Enterprise Server (i.e. scheme://host:port, path is automatic)

  - Splunk REST API Username

  - Splunk REST API Password

  - Splunk Authorization Token (Bearer Token or Session Key, e.g. "Bearer eyJFd3e46..." or "Splunk 192fd3e...". Cannot be used with username and password.)

AWS GuardDuty Integrations

Introduction

The Amazon GuardDuty integration collects and parses data from Amazon GuardDuty Findings REST APIs.

The Amazon GuardDuty integration can be used in three different modes to collect data:

- HTTP REST API - Amazon GuardDuty pushes logs directly to an HTTP REST API.

- AWS S3 polling - Amazon GuardDuty writes data to S3 and Elastic Agent polls the S3 bucket by listing its contents and reading new files.

- AWS S3 SQS - Amazon GuardDuty writes data to S3, S3 pushes a new object notification to SQS, Elastic Agent receives the notification from SQS, and then reads the S3 object. Multiple Agents can be used in this mode.
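In the S3 SQS mode, each SQS message body carries an S3 event notification that names the bucket and object to read. The sketch below shows, under the standard S3 notification layout, how those two values can be pulled out of a message body. The function name and the sample values are hypothetical, and Elastic Agent performs this step internally.

```python
import json
from urllib.parse import unquote_plus


def s3_objects_from_sqs_body(body: str) -> list:
    """Return (bucket, key) pairs named in an S3 event notification."""
    notification = json.loads(body)
    objects = []
    for record in notification.get("Records", []):
        if record.get("eventSource") != "aws:s3":
            continue
        s3_info = record["s3"]
        # Object keys are URL-encoded in notifications (spaces arrive as '+').
        key = unquote_plus(s3_info["object"]["key"])
        objects.append((s3_info["bucket"]["name"], key))
    return objects


sample_body = json.dumps(
    {
        "Records": [
            {
                "eventSource": "aws:s3",
                "eventName": "ObjectCreated:Put",
                "s3": {
                    "bucket": {"name": "example-guardduty-bucket"},
                    "object": {"key": "logs/finding+1.jsonl.gz"},
                },
            }
        ]
    }
)
print(s3_objects_from_sqs_body(sample_body))
```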

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

You need Elasticsearch for storing and searching your data and Kibana for visualizing and managing it. You can use our hosted Elasticsearch Service on Elastic Cloud, which is recommended, or self-manage the Elastic Stack on your own hardware.

Note: It is recommended to use AWS SQS for Amazon GuardDuty.

AWS GuardDuty Integration Procedures

To collect data from AWS S3 Bucket, follow the steps below:

- Configure the Data Forwarder to ingest data into an AWS S3 bucket. However, the user can set the parameter "Bucket List Prefix" according to the requirement.

To collect data from AWS SQS, follow the steps below:

- If data forwarding to an AWS S3 bucket hasn't been configured, first set up an AWS S3 bucket as mentioned in the documentation above.

- To set up an SQS queue, follow "Step 1: Create an Amazon SQS queue" mentioned in the Documentation.

- While creating an SQS queue, please provide the same bucket ARN that has been generated after creating the AWS S3 bucket.

- Set up event notification for an S3 bucket. Follow this guide.

- The user has to perform Step 3 for the guardduty data stream, and the prefix parameter should be set the same as the S3 Bucket List Prefix as created earlier. For example, logs/ for the guardduty data stream.

- For all the event notifications that have been created, select the event type as s3:ObjectCreated:*, select the destination type SQS Queue, and select the queue that has been created in Step 2.

Note:

- Credentials for the above AWS S3 and SQS input types should be configured according to the input configuration guide.

- Data collection via AWS S3 Bucket and AWS SQS are mutually exclusive in this case.
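The event notification described in the steps above (event type s3:ObjectCreated:*, destination SQS queue, prefix matching the Bucket List Prefix) can be sketched as a notification configuration document. This is an illustrative Python sketch only: the function name `build_queue_notification` and the queue ARN are placeholders, and the `logs/` prefix is the example value from the steps above.

```python
import json


def build_queue_notification(queue_arn: str, prefix: str) -> dict:
    """Build an S3 notification configuration that sends every
    s3:ObjectCreated:* event under `prefix` to the given SQS queue."""
    return {
        "QueueConfigurations": [
            {
                "QueueArn": queue_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [{"Name": "prefix", "Value": prefix}]
                    }
                },
            }
        ]
    }


# Placeholder ARN; replace it with the ARN of the queue created earlier.
config = build_queue_notification(
    "arn:aws:sqs:us-east-1:123456789012:guardduty-queue", "logs/"
)
print(json.dumps(config, indent=2))
```

The resulting document has the shape accepted by the S3 `PutBucketNotificationConfiguration` operation, so it can be saved as JSON and applied with the AWS CLI or an SDK instead of clicking through the console.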

To collect data from the Amazon GuardDuty API, users must have an Access Key and a Secret Key. To create them, follow the steps below:

- Log in to https://console.aws.amazon.com/.

- Go to https://console.aws.amazon.com/iam/ to access the IAM console.

- On the navigation menu, choose Users.

- Choose your IAM user name.

- Select Create access key from the Security Credentials tab.

- To see the new access key, choose Show.

Note

- The Secret Access Key and Access Key ID are required for the current integration package.

AWS Security Hub Integrations

Introduction

The AWS Security Hub integration collects and parses data from AWS Security Hub REST APIs.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Compatibility

- This module is tested against AWS Security Hub API version 1.0.

Requirements

To collect data from AWS Security Hub APIs, users must have an Access Key and a Secret Key. To create them, follow the steps below:

- Log in to https://console.aws.amazon.com/.

- Go to https://console.aws.amazon.com/iam/ to access the IAM console.

- On the navigation menu, choose Users.

- Choose your IAM user name.

- Select Create access key from the Security Credentials tab.

- To see the new access key, choose Show.

Note:

- For the current integration package, it is recommended to set the collection interval in hours.

- For the current integration package, the Secret Access Key and Access Key ID are required.

Logs:

- Findings - This is the securityhub_findings data stream.

- Insights - This is the securityhub_insights data stream.

AWS Security Hub Integration Procedures

Please provide the following information to CyTech:

Collect AWS Security Hub logs via API

- AWS Region - The AWS Region to collect data from.

Collect AWS Security Hub Insights from AWS

- AWS Region - The AWS Region to collect data from.

AWS Integrations

Introduction

This document shows information related to AWS Integration.

The AWS integration is used to collect logs and metrics across the many AWS services managed by your AWS account.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

Before using the AWS integration you will need:

- AWS Credentials to connect with your AWS account.

- AWS Permissions to make sure the user you're using to connect has permission to share the relevant data.

AWS Credentials

Use access keys directly (Option 1 Recommended)

Access keys are long-term credentials for an IAM user or the AWS account root user. To use access keys as credentials, you need to provide:

- access_key_id: The first part of the access key.

- secret_access_key: The second part of the access key.

Use an IAM role Amazon Resource Name (ARN)

To use an IAM role ARN, you need to provide either a credential profile or access keys along with the role_arn advanced option. role_arn is used to specify which AWS IAM role to assume for generating temporary credentials.

Instead of providing the access_key_id and secret_access_key directly to the integration, you will provide two advanced options to look up the access keys in the shared credentials file:

- credential_profile_name: The profile name in shared credentials file.


AWS Permissions

Specific AWS permissions are required for the IAM user to make specific AWS API calls. To enable the AWS integration to collect metrics and logs from all supported services, make sure to grant the permissions that CyTech needs for monitoring.

Reference permissions:

- ec2:DescribeInstances

- ec2:DescribeRegions

- cloudwatch:GetMetricData

- cloudwatch:ListMetrics

- iam:ListAccountAliases

- rds:DescribeDBInstances

- rds:ListTagsForResource

- s3:GetObject

- sns:ListTopics

- sqs:ChangeMessageVisibility

- sqs:DeleteMessage

- sqs:ListQueues

- sqs:ReceiveMessage

- sts:AssumeRole

- sts:GetCallerIdentity

- tag:GetResources
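The reference permissions above can be collected into a single IAM policy document. The sketch below, in Python, only builds and prints the JSON. The `"Resource": "*"` scope and the function name `build_policy` are assumptions made for illustration; in practice the resources should be narrowed to what your account actually needs.

```python
import json

# Actions copied from the reference permissions list above.
REFERENCE_ACTIONS = [
    "ec2:DescribeInstances",
    "ec2:DescribeRegions",
    "cloudwatch:GetMetricData",
    "cloudwatch:ListMetrics",
    "iam:ListAccountAliases",
    "rds:DescribeDBInstances",
    "rds:ListTagsForResource",
    "s3:GetObject",
    "sns:ListTopics",
    "sqs:ChangeMessageVisibility",
    "sqs:DeleteMessage",
    "sqs:ListQueues",
    "sqs:ReceiveMessage",
    "sts:AssumeRole",
    "sts:GetCallerIdentity",
    "tag:GetResources",
]


def build_policy(actions):
    """Return an IAM policy document allowing the given actions.

    Assumption: a single Allow statement on all resources ("*").
    """
    return {
        "Version": "2012-10-17",
        "Statement": [
            {"Effect": "Allow", "Action": sorted(actions), "Resource": "*"}
        ],
    }


policy = build_policy(REFERENCE_ACTIONS)
print(json.dumps(policy, indent=2))
```

The printed JSON can be pasted into the IAM console policy editor and attached to the IAM user whose access keys are shared with CyTech.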

AWS Integrations Procedures

Access Key ID and Secret Access Key:

These are associated with AWS Identity and Access Management (IAM) users and are used for programmatic access to AWS services. To find them:

- Access the AWS Management Console.

- Go to the "IAM" (Identity and Access Management) service.

- Select the IAM user for which you want to retrieve the access keys.

- Under the "Security credentials" tab, you can find the Access Key ID and you can create a new Secret Access Key if needed.

S3 Bucket ARN:

You can find the Amazon Resource Name (ARN) for an S3 bucket in the S3 Management Console or by using the AWS CLI. To find it in the console:

- Access the AWS Management Console.

- Go to the S3 service and select the bucket.

- Open the Properties tab.

- Find the ARN under Bucket overview.

It typically looks like this: arn:aws:s3:::your-bucket-name
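Because an S3 bucket ARN in the standard AWS partition is just a fixed prefix followed by the bucket name, it can also be derived or checked without opening the console. The following Python sketch assumes the standard `aws` partition (other partitions use a different prefix), and both function names are hypothetical.

```python
S3_ARN_PREFIX = "arn:aws:s3:::"  # assumption: standard "aws" partition


def bucket_arn(bucket_name: str) -> str:
    """Build the ARN for a bucket name, e.g. 'your-bucket-name'."""
    if not bucket_name:
        raise ValueError("Bucket name must not be empty")
    return S3_ARN_PREFIX + bucket_name


def bucket_name_from_arn(arn: str) -> str:
    """Extract the bucket name from an S3 bucket ARN."""
    if not arn.startswith(S3_ARN_PREFIX) or len(arn) == len(S3_ARN_PREFIX):
        raise ValueError(f"Not an S3 bucket ARN: {arn!r}")
    return arn[len(S3_ARN_PREFIX):]
```

A quick round trip such as `bucket_name_from_arn(bucket_arn("your-bucket-name"))` is a simple way to confirm that the value being sent to CyTech is well formed.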

Log Group ARN:

Log Groups are associated with AWS CloudWatch Logs. You can find the ARN for a log group as follows:

- Access the AWS Management Console.

- Go to the "CloudWatch" service.

- In the CloudWatch Logs section, open the list of log groups.

- Select the log group that you want to open.

- In the details of the log group, you will find the ARN.

SQS Queue URL: (Ignore if you're not using SQS Queue URL)

To find the URL of an Amazon Simple Queue Service (SQS) queue:

- Access the AWS Management Console.

- Go to the "SQS" service.

- Select the specific queue you're interested in.

- In the queue details, you can find the Queue URL.
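Before sending the Queue URL, it can help to confirm that it has the expected shape. The sketch below assumes the common `https://sqs.<region>.amazonaws.com/<account-id>/<queue-name>` form; legacy or private-endpoint URLs look different and would be rejected by this check. The function name `parse_queue_url` is hypothetical.

```python
from urllib.parse import urlparse


def parse_queue_url(url: str) -> dict:
    """Split an SQS queue URL into region, account ID and queue name.

    Assumes the form https://sqs.<region>.amazonaws.com/<account>/<queue>.
    """
    parsed = urlparse(url)
    host_parts = parsed.netloc.split(".")
    path_parts = parsed.path.strip("/").split("/")
    if (
        parsed.scheme != "https"
        or len(host_parts) < 4
        or host_parts[0] != "sqs"
        or len(path_parts) != 2
    ):
        raise ValueError(f"Unexpected SQS queue URL: {url!r}")
    return {
        "region": host_parts[1],
        "account_id": path_parts[0],
        "queue_name": path_parts[1],
    }


# Placeholder account ID and queue name.
info = parse_queue_url("https://sqs.us-east-1.amazonaws.com/123456789012/my-queue")
print(info)
```

The extracted region is also a useful cross-check against the region of the S3 bucket that feeds the queue.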

Please provide the following information to CyTech:

- Access Key ID

- Secret Access Key

- S3 Bucket ARN

- Log Group ARN

- SQS Queue URL : (Ignore if you're not using SQS Queue URL)

CISCO Meraki Integrations

Introduction

Cisco Meraki offers a centralized cloud management platform for all Meraki devices such as MX Security Appliances, MR Access Points and so on. Its out-of-band cloud architecture creates secure, scalable, and easy-to-deploy networks that can be managed from anywhere. This can be done from almost any device using web-based Meraki Dashboard and Meraki Mobile App. Each Meraki network generates its own events.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Compatibility

A syslog server can be configured to store messages for reporting purposes from MX Security Appliances, MR Access Points, and MS switches. This package collects events from the configured syslog server. The integration supports collection of events from "MX Security Appliances" and "MR Access Points". The "MS Switch" events are not recognized.

Requirements

Cisco Meraki Dashboard Configuration

SYSLOG

The Cisco Meraki dashboard can be used to configure one or more syslog servers and the Meraki message types to be sent to them. Refer to the Syslog Server Overview and Configuration page for more information on how to configure a syslog server on Cisco Meraki.

API ENDPOINT (WEBHOOKS)

Cisco Meraki dashboard can be used to configure Meraki webhooks. Refer to the Webhooks Dashboard Setup section.

Configure the Cisco Meraki integration

SYSLOG

Depending on the syslog server setup in your environment, check one or more of the following options: "Collect syslog from Cisco Meraki via UDP", "Collect syslog from Cisco Meraki via TCP", "Collect syslog from Cisco Meraki via file".

Enter the values for syslog host and port OR file path based on the chosen configuration options.

API Endpoint (Webhooks)

Check the "Collect events from Cisco Meraki via Webhooks" option.

- Enter values for "Listen Address", "Listen Port" and "Webhook path" to form the endpoint URL. Make note of the Endpoint URL https://{AGENT_ADDRESS}:8686/meraki/events.

- Enter a value for "Secret value". This must match the "Shared Secret" value entered when configuring the webhook from Meraki cloud.

- Enter values for "TLS". Cisco Meraki requires that the webhook accept requests over HTTPS, so you must either configure the integration with a valid TLS certificate or use a reverse proxy in front of the integration.
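The endpoint URL is formed from the agent address, the listen port and the webhook path described above. The Python sketch below shows that composition, using the port and path from the example URL as defaults. Both function names are hypothetical, and the constant-time comparison is only an illustration of the "Secret value must match Shared Secret" rule, not a description of how the integration checks it internally.

```python
import hmac


def webhook_endpoint(agent_address: str,
                     listen_port: int = 8686,
                     webhook_path: str = "/meraki/events") -> str:
    """Compose the HTTPS endpoint URL to register in the Meraki dashboard."""
    if not 1 <= listen_port <= 65535:
        raise ValueError("Listen port must be between 1 and 65535")
    path = "/" + webhook_path.lstrip("/")
    return f"https://{agent_address}:{listen_port}{path}"


def secrets_match(configured_secret: str, received_secret: str) -> bool:
    """Compare the configured secret with a received one in constant time."""
    return hmac.compare_digest(
        configured_secret.encode("utf-8"), received_secret.encode("utf-8")
    )


# agent.example.com is a placeholder for the Log Collector address.
print(webhook_endpoint("agent.example.com"))
```

The URL printed here is the value to enter as the webhook receiver in the Meraki dashboard, alongside the same shared secret configured in the integration.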

Log Events

Enable to collect Cisco Meraki log events for all the applications configured for the chosen log stream.

Logs

Syslog

The cisco_meraki.log dataset provides events from the configured syslog server. All Cisco Meraki syslog specific fields are available in the cisco_meraki.log field group.

API Endpoint (Webhooks)

Cisco Meraki Integration Procedures

Please provide the following information to CyTech:

- Collect syslog from Cisco Meraki via UDP

  - Listen Address - The bind address to listen for UDP connections. Set to 0.0.0.0 to bind to all available interfaces.

  - Listen Port - The UDP port number to listen on.

- Collect syslog from Cisco Meraki via TCP

  - Listen Address - The bind address to listen for TCP connections. Set to 0.0.0.0 to bind to all available interfaces.

  - Listen Port - The TCP port number to listen on.

- Collect syslog from Cisco Meraki via file

  - Paths

- Collect events from Cisco Meraki via Webhooks

  - Listen Address - Bind address for the listener. Use 0.0.0.0 to listen on all interfaces.

  - Listen Port

CISCO Secure Endpoint Integrations

Introduction

Secure Endpoint offers cloud-delivered, advanced endpoint detection and response across multidomain control points to rapidly detect, contain, and remediate advanced threats.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

This integration is for Cisco Secure Endpoint logs. It includes the following datasets for receiving logs over syslog or read from a file:

- event dataset: supports Cisco Secure Endpoint Event logs.

Generating Client ID and API Key:

- Log in to your AMP for Endpoints Console.

- Go to Accounts > Organization Settings.

- Click Configure API Credentials under Features to generate the Client ID and secure API Key.

Logs

Secure Endpoint

The event dataset collects Cisco Secure Endpoint logs.

What can the Secure Endpoint API be used for?

- Generate a list of organizations a user has access to

- Generate a list of policies for a specified organization

- Generate specific information about a specified policy such as:

  - General policy data

  - Associated network control lists

  - Associated computers

  - Associated groups

  - Proxy settings

  - Policy XML

- Generate all policy types and operating systems available for a specified organization

Top Use Cases

- Generating reports on policy settings across an organization

- Inspecting a particular policy's settings

- Querying to find policies matching certain criteria in order to detect which policies should be edited

Response Format

- Data

- Meta

- Errors

Cisco Secure Endpoint Integration Procedures

Please provide the following information to CyTech:

Collect logs from the Cisco Secure Endpoint API.

- Client ID - Cisco Secure Endpoint Client ID

- API Key - Cisco Secure Endpoint API Key

CISCO Umbrella Integrations

Introduction

Cisco Umbrella is a cloud security platform that provides an additional line of defense against malicious software and threats on the internet by using threat intelligence. That intelligence helps prevent adware, malware, botnets, phishing attacks, and other known bad Websites from being accessed.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Prerequisites

- You must have Full Admin access to Umbrella to create and manage Umbrella API keys or Umbrella KeyAdmin API keys.

Requirements

This integration is for Cisco Umbrella. It includes the following datasets for receiving logs from an AWS S3 bucket using an SQS notification queue and Cisco Managed S3 bucket without SQS:

- log dataset: supports Cisco Umbrella logs.

Logs

Umbrella

When using Cisco Managed S3 buckets that do not use SQS, load balancing across multiple agents is not possible. Configure a single agent to poll the S3 bucket for new and updated files, and scale vertically by adjusting the number of workers.

The log dataset collects Cisco Umbrella logs.

Advantages of Integrating with the Umbrella API

The Umbrella API features a number of improvements over the Umbrella v1 APIs and the Umbrella Reporting v2 API.

- Intuitive base URI

- API paths defined by top-level scopes

- Intent-based, granular API key scopes

- API key expiration

- Updated API key administration dashboard views

- Programmatic API key administration

- API authentication and authorization supported by OAuth 2.0 client credentials flow

- Portable, programmable API interface for client integrations

Before you send a request to the Umbrella API, you must create new Umbrella API credentials and generate an API access token. For more information, see Umbrella API Authentication.

https://developer.cisco.com/docs/cloud-security/authentication/#authentication

Authentication

The Umbrella API provides a standard REST interface and supports the OAuth 2.0 client credentials flow. To get started, log in to Umbrella and create an Umbrella API key. Then, use your API credentials to generate an API access token.

Note: API keys, passwords, secrets, and tokens allow access to your private customer data. You should never share your credentials with another user or organization.
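The OAuth 2.0 client credentials flow mentioned above amounts to a POST request that carries the API key ID and secret as HTTP Basic credentials and asks for `grant_type=client_credentials`. The Python sketch below only builds that request and does not send it. The token URL is an assumption that should be confirmed against the Umbrella API Authentication page, and `build_token_request` is a hypothetical helper name.

```python
import base64
import urllib.parse
import urllib.request

# Assumed token endpoint; confirm it in the Umbrella API Authentication page.
TOKEN_URL = "https://api.umbrella.com/auth/v2/token"


def build_token_request(key_id: str, key_secret: str,
                        token_url: str = TOKEN_URL) -> urllib.request.Request:
    """Build (but do not send) an OAuth 2.0 client credentials token request."""
    credentials = f"{key_id}:{key_secret}".encode("utf-8")
    body = urllib.parse.urlencode(
        {"grant_type": "client_credentials"}
    ).encode("ascii")
    request = urllib.request.Request(token_url, data=body, method="POST")
    request.add_header(
        "Authorization", "Basic " + base64.b64encode(credentials).decode("ascii")
    )
    request.add_header("Content-Type", "application/x-www-form-urlencoded")
    return request


# Placeholder credentials; never hard-code real secrets.
request = build_token_request("example-key-id", "example-key-secret")
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` would perform the call. The response of a client credentials flow is normally a JSON document containing the access token and its lifetime.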

Log in to Umbrella

- Log in to Umbrella with the following URL: https://dashboard.umbrella.com

- You can find your username after Admin in the navigation tree. Confirm that your organization appears under your username.

Create Umbrella API Key

Create an Umbrella API key ID and key secret.

Note: You have only one opportunity to copy your API secret. Umbrella does not save your API secret and you cannot retrieve the secret after its initial creation.

Refresh Umbrella API Key

Refresh an Umbrella API key ID and key secret.

Note: You have only one opportunity to copy your API secret. Umbrella does not save your API secret and you cannot retrieve the secret after its initial creation.

Update Umbrella API Key

Update an Umbrella API key.

Cisco Umbrella Integration Procedures

Please provide the following information to CyTech:

Collect logs from Cisco Umbrella

- Queue URL - URL of the AWS SQS queue that messages will be received from. For Cisco Managed S3 buckets or S3 without SQS, use Bucket ARN.

- Bucket ARN - Required for Cisco Managed S3. If the S3 bucket does not use SQS, this is the address for the S3 bucket. One example is arn:aws:s3:::cisco-managed-eu-central-1. For a list of Cisco Managed buckets, please see https://docs.umbrella.com/mssp-deployment/docs/enable-logging-to-a-cisco-managed-s3-bucket.

- Bucket Region - Required for Cisco Managed S3. The region the bucket is located in.

- Bucket List Prefix - Required for Cisco Managed S3. This sets the root folder of the S3 bucket that should be monitored, found in the S3 Web UI. Example value: 1235_654vcasd23431e5dd6f7fsad457sdf1fd5.

- Number of Workers - Required for Cisco Managed S3. Number of workers that will process the S3 objects listed. Minimum is 1.

- Bucket List Interval - Time interval for polling listing of the S3 bucket. Defaults to 120s.

- Access Key ID

- Secret Access Key
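The rules attached to the fields above (Queue URL or Bucket ARN, at least one worker, a 120s default list interval) can be captured in a small validation sketch. The class name `UmbrellaS3Settings` and its field names are hypothetical. Treating Queue URL and Bucket ARN as strictly exclusive is an assumption drawn from the field descriptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class UmbrellaS3Settings:
    access_key_id: str
    secret_access_key: str
    queue_url: Optional[str] = None
    bucket_arn: Optional[str] = None
    bucket_region: Optional[str] = None
    bucket_list_prefix: Optional[str] = None
    number_of_workers: int = 1            # minimum is 1
    bucket_list_interval: str = "120s"    # documented default

    def validate(self) -> None:
        """Raise ValueError if the combination of values is inconsistent."""
        # Assumption: use the SQS queue or direct bucket polling, not both.
        if bool(self.queue_url) == bool(self.bucket_arn):
            raise ValueError("Provide exactly one of queue_url or bucket_arn")
        if self.bucket_arn and not (self.bucket_region and self.bucket_list_prefix):
            raise ValueError("bucket_region and bucket_list_prefix are required "
                             "when polling a bucket directly")
        if self.number_of_workers < 1:
            raise ValueError("number_of_workers must be at least 1")
```

Running `validate()` on the collected values before submitting them catches the most common mix-ups, such as supplying a Bucket ARN without its region and prefix.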

If you need further assistance, kindly contact our support at support@cytechint.com for prompt assistance and guidance.

Cloudflare Integration

Introduction

Cloudflare integration uses Cloudflare's API to retrieve audit logs and traffic logs from Cloudflare, for a particular zone, and ingest them into Elasticsearch. This allows you to search, observe and visualize the Cloudflare log events through Elasticsearch.

Users of Cloudflare use Cloudflare services to increase the security and performance of their web sites and services.

To enable Cloudflare Logpush, please refer to Section 5. Currently, the procedures described are for the setup of Amazon S3.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

Configure Cloudflare audit logs data stream

Enter values for "Auth Email", "Auth Key" and "Account ID".

- Auth Email is the email address associated with your account.

- Auth Key is the API key generated on the "My Account" page.

- Account ID can be found on the Cloudflare dashboard. Follow the navigation documentation from here.

Configure Cloudflare logs

These logs contain data related to the connecting client, the request path through the Cloudflare network, and the response from the origin web server. For more information see here.

The integration can retrieve Cloudflare logs using either of the following:

- Auth Email and Auth Key

- API Token. More information is available here.

CONFIGURE USING AUTH EMAIL AND AUTH KEY

Enter values for "Auth Email", "Auth Key" and "Zone ID".

- Auth Email is the email address associated with your account.

- Auth Key is the API key generated on the "My Account" page.

- Zone ID can be found here.

CONFIGURE USING API TOKEN

Enter values for "API Token" and "Zone ID".

For the Cloudflare integration to be able to successfully get logs, the following permission must be granted to the API token: Account.Access: Audit Logs: Read.

- API Tokens allow for more granular permission settings.

- Zone ID can be found here.
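The two authentication options differ only in the HTTP headers sent to the Cloudflare API: the Auth Email and Auth Key pair uses the `X-Auth-Email` and `X-Auth-Key` headers, while an API Token is sent as a Bearer token. A minimal Python sketch of that choice follows. The helper name `cloudflare_headers` is hypothetical, and rejecting a mix of both methods is an assumption made here for clarity.

```python
from typing import Dict, Optional


def cloudflare_headers(auth_email: Optional[str] = None,
                       auth_key: Optional[str] = None,
                       api_token: Optional[str] = None) -> Dict[str, str]:
    """Return the request headers for one of the two authentication methods."""
    if api_token:
        if auth_email or auth_key:
            # Assumption: treat mixing both methods as a configuration error.
            raise ValueError("Use either an API token or Auth Email/Auth Key")
        return {"Authorization": f"Bearer {api_token}"}
    if auth_email and auth_key:
        return {"X-Auth-Email": auth_email, "X-Auth-Key": auth_key}
    raise ValueError("Provide an API token, or both Auth Email and Auth Key")


# Placeholder values only.
print(cloudflare_headers(api_token="example-token"))
print(cloudflare_headers(auth_email="admin@example.com", auth_key="example-key"))
```

An API Token is usually the safer choice because its permissions can be limited to the single read permission listed above, whereas the Auth Key grants the full access of the account it belongs to.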

Logs

Audit

Audit logs summarize the history of changes made within your Cloudflare account. Audit logs include account-level actions like login and logout, as well as setting changes to DNS, Crypto, Firewall, Speed, Caching, Page Rules, Network, and Traffic features, etc.

Logpull

These logs contain data related to the connecting client, the request path through the Cloudflare network, and the response from the origin web server.

Cloudflare Integration Procedures

Please provide the following information to CyTech:

See the Screenshot Below

Audit Logs

- Auth Email

- Auth Key

- Account ID

Cloudflare Logs

- Auth Token

- Zone ID

Enable Logpush to Amazon S3

To enable the Cloudflare Logpush service:

- Log in to the Cloudflare dashboard.

- Select the Enterprise account or domain you want to use with Logpush.

- Go to Analytics & Logs > Logs.

- Select Add Logpush job. A modal window opens where you will need to complete several steps.

- Select the dataset you want to push to a storage service.

- Select the data fields to include in your logs. Add or remove fields later by modifying your settings in Logs > Logpush.

- Select Amazon S3.

- Enter or select the following destination information:

  - Bucket path

  - Daily subfolders

  - Bucket region

  - Encryption constraint in bucket policy

  - For Grant Cloudflare access to upload files to your bucket, make sure your bucket has a policy (if you did not add it already):

    - Copy the JSON policy, then go to your bucket in the Amazon S3 console and paste the policy in Permissions > Bucket Policy and click Save.

- Click Validate access.

- Enter the Ownership token (included in a file or log Cloudflare sends to your provider) and click Prove ownership. To find the ownership token, click the Open button in the Overview tab of the ownership challenge file.

- Click Save and Start Pushing to finish enabling Logpush.

Once connected, Cloudflare lists Amazon S3 as a connected service under Logs > Logpush. Edit or remove connected services from here.

Crowdstrike Integrations

Introduction

This integration is for CrowdStrike products. It includes the following datasets for receiving logs:

falcon dataset consists of endpoint data and Falcon platform audit data forwarded from Falcon SIEM Connector.

fdr dataset consists of logs forwarded using the Falcon Data Replicator.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Compatibility

This integration supports CrowdStrike Falcon SIEM-Connector-v2.0.

Requirements

Logs

Falcon

Contains endpoint data and CrowdStrike Falcon platform audit data forwarded from Falcon SIEM Connector.

FDR

The CrowdStrike Falcon Data Replicator (FDR) allows CrowdStrike users to replicate FDR data from CrowdStrike managed S3 buckets. CrowdStrike writes notification events to a CrowdStrike managed SQS queue when new data is available in S3.

This integration can be used in two ways. It can consume SQS notifications directly from the CrowdStrike managed SQS queue or it can be used in conjunction with the FDR tool that replicates the data to a self-managed S3 bucket and the integration can read from there.

In both cases SQS messages are deleted after they are processed. This allows you to operate more than one Elastic Agent with this integration if needed and not have duplicate events, but it means you cannot ingest the data a second time.

CrowdStrike Integration Procedures

Please provide the following information to CyTech:

Collect CrowdStrike Falcon Data Replicator logs (input: aws-s3) Option 1

- AWS: Access Key ID

- AWS: Secret Access Key

- AWS: Queue URL - URL of the AWS SQS queue that messages will be received from.

Collect CrowdStrike logs via API. Option 2 (Recommended)

- Client ID: Client ID for CrowdStrike.

- Client Secret: Client Secret for CrowdStrike.

- URL: Token URL of CrowdStrike.

Dropbox Integrations

Introduction

Connecting Dropbox

Use the Workplace Search Dropbox connector to automatically capture, sync and index the following items from your Dropbox service:

Stored Files

Including ID, File Metadata, File Content, Updated by, and timestamps.

Dropbox Paper

Including ID, Metadata, Content, Updated by, and timestamps.

This document helps you configure and connect your Dropbox service to Workplace Search. To do this, you must register your Elastic deployment in the Dropbox Developer platform, by creating an OAuth 2.0 app. This gives your app permission to access Dropbox data. You will need your Workplace Search OAuth redirect URL, so have that handy.

If you need a primer on OAuth, read the official OAuth 2.0 authorization flow RFC. The diagrams provide a good mental model of the protocol flow. Dropbox also has its own OAuth guide.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

Configuring the Dropbox Connector

- To register your Elastic deployment with Dropbox, create a new OAuth app in your organization’s Dropbox developer platform. Make sure to use a trusted and stable Dropbox account.

- Provide basic information about the app and define the access scopes, or specific permissions, the app needs to interact with Dropbox. A good rule of thumb is that these should be read-only permissions. Choose only the following permissions:

  - files.content.read

  - sharing.read

  - account_info.read

  - files.metadata.read

- To fetch document-level permissions, you must create an OAuth App using a team-owned (Dropbox Business) account. Enable document-level permissions by adding the following permissions:

  - team_info.read

  - team_data.member

  - team_data.team_space

  - members.read

- Register a redirect URL for the app to use. This is where the OAuth 2.0 service will return the user after they authorize the application. This is the Workplace Search OAuth redirect URL for your deployment. This must be an https endpoint for production use cases. Only use http for local development.

- Find and record the client_id and client_secret for the app. Dropbox calls these App Key and App Secret.

- Switch back to your organization’s Workplace Search administrative dashboard.

- In the Sources > Add Source tab, add a new Dropbox source.

- Configure the service with Workplace Search using the App Key and App Secret.

Your Dropbox service is now configured, and you can connect it to Workplace Search.

Dropbox Integration Procedures

Connecting Dropbox to Workplace Search

Once the Dropbox connector is configured, you can connect a Dropbox instance to your organization’s Workplace Search deployment.

- Follow the Dropbox authentication flow as presented in the Workplace Search administrative dashboard.

- If the authentication flow succeeds, you will be redirected to Workplace Search.

Your Dropbox content becomes searchable as soon as syncing starts. Once configured and connected, Dropbox synchronizes automatically every 2 hours.

Limiting the content to be indexed

If you don’t need to index all available content, you can use the API to apply indexing rules. This reduces indexing load and overall index size. See Customizing indexing.

The path_template and file_extension rules are applicable for Dropbox.

Synchronized fields

The following table lists the fields synchronized from the connected source to Workplace Search. The attributes in the table apply to the default search application, as follows:

- Display name - The label used when displayed in the UI

- Field name - The name of the underlying field attribute

- Faceted filter - whether the field is a faceted filter by default, or can be enabled (see also: Customizing filters)

- Automatic query refinement preceding phrases - The default list of phrases that must precede a value of this field in a search query in order to automatically trigger query refinement. If "None," a value from this field may trigger refinement regardless of where it is found in the query string. If '', a value from this field must be the first token(s) in the query string. If N.A., automatic query refinement is not available for this field by default. All fields that have a faceted filter (default or configurable) can also be configured for automatic query refinement; see also Update a content source, Get a content source’s automatic query refinement details and Customizing filters.

F5 Integrations

Introduction

This document shows information related to F5 Integration.

The F5 BIG-IP integration allows users to monitor LTM, AFM, APM, ASM, and AVR activity. F5 BIG-IP covers software and hardware designed around application availability, access control, and security solutions.

The F5 BIG-IP integration can be used in three different modes to collect data:

HTTP Endpoint mode - F5 BIG-IP pushes logs directly to an HTTP endpoint hosted by users’ Elastic Agent.

AWS S3 polling mode - F5 BIG-IP writes data to S3 and Elastic Agent polls the S3 bucket by listing its contents and reading new files.

AWS S3 SQS mode - F5 BIG-IP writes data to S3, S3 pushes a new object notification to SQS, Elastic Agent receives the notification from SQS, and then reads the S3 object. Multiple Agents can be used in this mode.

For example, users can use the data from this integration to analyze the traffic that passes through their F5 BIG-IP network.

Data streams

The F5 BIG-IP integration collects one type of data stream: log.

Logs help users keep a record of events happening on the network using telemetry streaming. The log data stream collected by the F5 BIG-IP integration includes events that are related to network traffic. See more details in the Logs section.

This integration targets the five types of events as mentioned below:

LTM provides the platform for creating virtual servers, performance, service, protocol, authentication, and security profiles to define and shape users’ application traffic. For more information, refer to the link here.

AFM is designed to reduce the hardware and extra hops required when ADC's are paired with traditional firewalls and helps to protect traffic destined for the user's data center. For more information, refer to the link here.

APM provides federation, SSO, application access policies, and secure web tunneling and allows granular access to users' various applications, virtualized desktop environments, or just go full VPN tunnel. For more information, refer to the link here.

ASM is F5's web application firewall (WAF) solution. It allows users to tailor acceptable and expected application behavior on a per-application basis. For more information, refer to the link here.

AVR provides detailed charts and graphs to give users more insight into the performance of web applications, with detailed views on HTTP and TCP stats, as well as system performance (CPU, memory, etc.). For more information, refer to the link here.

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Requirements

Elasticsearch is needed to store and search data, and Kibana is needed for visualizing and managing it. You can use the hosted Elasticsearch Service on Elastic Cloud, which is recommended, or self-manage the Elastic Stack on your own hardware.

The reference link for requirements of telemetry streaming is here: https://clouddocs.f5.com/products/extensions/f5-telemetry-streaming/latest/prereqs.html

The reference link for requirements of the Application Services 3 (AS3) Extension is here: https://clouddocs.f5.com/products/extensions/f5-appsvcs-extension/latest/userguide/prereqs.html

This module has been tested against F5 BIG-IP version 16.1.0, Telemetry Streaming version 1.32.0 and AS3 version 3.40.0.

Setup

To collect LTM, AFM, APM, ASM, and AVR data from F5 BIG-IP, the user has to configure modules in F5 BIG-IP as per the requirements.

To set up the F5 BIG-IP environment, users can use the BIG-IP system browser-based Configuration Utility or the command line tools that are provided. For more information related to the configuration of F5 BIG-IP servers, refer to F5 support website here.

https://support.f5.com/csp/knowledge-center/software

Configuration of Telemetry Streaming in F5

For downloading and installing Telemetry Streaming, refer to the link here.

https://clouddocs.f5.com/products/extensions/f5-telemetry-streaming/latest/installation.html

Telemetry Streaming will send logs in the JSON format to the destination. Telemetry Streaming is compatible with BIG-IP versions 13.0 and later. Users have to prepare F5 servers for it and set up the Telemetry Streaming Consumer.

To use Telemetry Streaming, users have to send a POST request containing the declaration to https://<BIG-IP>/mgmt/shared/telemetry/declare.
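As a rough illustration of that POST request, the Python sketch below builds a small Telemetry Streaming declaration with one listener and one Generic_HTTP consumer pointing at the Elastic Agent HTTP endpoint. The object names `My_Listener` and `My_Consumer`, the listener port, the consumer host, port and path, and the helper name `build_declare_request` are all illustrative assumptions. Authentication to the BIG-IP is omitted, and the request is built but not sent.

```python
import json
import urllib.request


def build_declare_request(big_ip_host: str, consumer_host: str,
                          consumer_port: int, consumer_path: str
                          ) -> urllib.request.Request:
    """Build (but do not send) a Telemetry Streaming declaration request."""
    declaration = {
        "class": "Telemetry",
        # Listener that receives logs from the BIG-IP modules (assumed port).
        "My_Listener": {"class": "Telemetry_Listener", "port": 6514},
        # Consumer that forwards JSON events to the HTTP endpoint.
        "My_Consumer": {
            "class": "Telemetry_Consumer",
            "type": "Generic_HTTP",
            "host": consumer_host,
            "protocol": "https",
            "port": consumer_port,
            "path": consumer_path,
            "method": "POST",
        },
    }
    url = f"https://{big_ip_host}/mgmt/shared/telemetry/declare"
    body = json.dumps(declaration).encode("utf-8")
    request = urllib.request.Request(url, data=body, method="POST")
    request.add_header("Content-Type", "application/json")
    return request


# Placeholder host names, port and path.
request = build_declare_request("bigip.example.com", "agent.example.com", 9559, "/")
print(request.full_url)
```

The consumer values must match the listen address, port and path configured for the HTTP endpoint input of the integration. Check the exact declaration schema against the Telemetry Streaming documentation for your version.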

F5 BIG-IP modules named LTM, AFM, ASM, and APM are not configured by Telemetry Streaming; they must be configured with AS3 or another method. The reference link for setting up the AS3 extension in F5 BIG-IP is here.

To configure logging using AS3, refer to the link here.

To collect data from an AWS S3 bucket, follow the steps below:

- Create an Amazon S3 bucket. Refer to: https://docs.aws.amazon.com/AmazonS3/latest/userguide/create-bucket-overview.html

- The default value of the "Bucket List Prefix" is listed below. However, the user can set the parameter "Bucket List Prefix" according to the requirement.

To collect data from AWS SQS, follow the steps below:

- If data forwarding to an AWS S3 bucket has not been configured yet, first set up an AWS S3 bucket as described above.

- To set up an SQS queue, follow "Step 1: Create an Amazon SQS queue" in the documentation: https://docs.aws.amazon.com/AmazonS3/latest/userguide/ways-to-add-notification-config-to-bucket.html

- While creating the SQS queue, provide the same bucket ARN that was generated when the AWS S3 bucket was created.

- Set up event notifications for the S3 bucket. Follow this link: https://docs.aws.amazon.com/AmazonS3/latest/userguide/enable-event-notifications.html

- Set the prefix parameter to the same value as the S3 Bucket List Prefix created earlier (for example, log/ for a log data stream).

- Select the event type s3:ObjectCreated:*, select the destination type SQS Queue, and select the queue that was created in Step 2.
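The SQS and event-notification steps above can be sketched as two JSON documents: an SQS access policy that lets the bucket send messages to the queue, and an S3 notification configuration that filters on the prefix. The Python below only builds these documents; the ARNs and the log/ prefix in the usage lines are placeholders, and the exact policy your account needs may differ.

```python
import json


def build_queue_policy(queue_arn: str, bucket_arn: str) -> dict:
    """SQS access policy allowing the given bucket to send messages."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Principal": {"Service": "s3.amazonaws.com"},
                "Action": "SQS:SendMessage",
                "Resource": queue_arn,
                # Restrict senders to the bucket created earlier.
                "Condition": {"ArnLike": {"aws:SourceArn": bucket_arn}},
            }
        ],
    }


def build_notification_config(queue_arn: str, prefix: str) -> dict:
    """S3 notification configuration for new objects under a prefix."""
    return {
        "QueueConfigurations": [
            {
                "QueueArn": queue_arn,
                "Events": ["s3:ObjectCreated:*"],
                "Filter": {
                    "Key": {
                        "FilterRules": [{"Name": "prefix", "Value": prefix}]
                    }
                },
            }
        ]
    }


if __name__ == "__main__":
    # Placeholder ARNs for illustration only.
    queue = "arn:aws:sqs:us-east-1:123456789012:f5-logs-queue"
    bucket = "arn:aws:s3:::example-f5-logs"
    print(json.dumps(build_queue_policy(queue, bucket), indent=2))
    print(json.dumps(build_notification_config(queue, "log/"), indent=2))
```

The second document has the shape accepted by the S3 PutBucketNotificationConfiguration operation (for example, the NotificationConfiguration argument of boto3's put_bucket_notification_configuration), so it can be applied with the AWS SDK or pasted into equivalent tooling.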

Note:

- Credentials for the above AWS S3 and SQS input types should be configured using the link. https://www.elastic.co/guide/en/beats/filebeat/current/filebeat-input-aws-s3.html#aws-credentials-config

- Data collection via AWS S3 Bucket and AWS SQS are mutually exclusive in this case.

Enabling the integration in Elastic

- In Kibana, go to Management > Integrations.

- In the "Search for integrations" search bar, type F5 BIG-IP.

- Click the F5 BIG-IP integration in the search results.

- Click the Add F5 BIG-IP button to add the F5 BIG-IP integration.

- Enable the integration to collect logs via AWS S3 or HTTP endpoint input.

F5 BIG-IP integration

The "Integration name", the "Description", and the following settings need to be provided on the Configure integration page when adding the F5 BIG-IP integration.

Procedures:

Please provide the following information to CyTech:

Collect F5 BIG-IP logs via HTTP Endpoint:

- Listen Address - The bind address to listen for HTTP endpoint connections. Set to 0.0.0.0 to bind to all available interfaces.

- Listen Port - The port number the listener binds to.
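Before settling on a listen address and port, it can help to confirm that nothing else on the collector host is already using them. The following is a minimal Python sketch of such a check, run on the host itself; the function name and the example port are illustrative and are not part of the integration.

```python
import socket


def can_bind(address: str, port: int) -> bool:
    """Return True if a TCP socket can be bound to address:port right now."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        try:
            sock.bind((address, port))
        except OSError:
            # Address not available on this host, port in use,
            # or insufficient privileges (ports below 1024).
            return False
        return True


if __name__ == "__main__":
    # 0.0.0.0 means "all available interfaces"; 9080 is an example port.
    print(can_bind("0.0.0.0", 9080))
```

A False result does not say which of the possible causes applies, so treat it as a prompt to investigate rather than a diagnosis.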

Fortinet-Fortigate Integrations

Introduction

This integration is for Fortinet FortiGate logs sent in the syslog format.

Pre-requisite:

Configure syslog on FortiGate

From the GUI:

- Log into FortiGate.

- Select Log & Report to expand the menu.

- Select Log Settings.

- Toggle Send Logs to Syslog to Enabled.

- Enter the Syslog Collector IP address. Note: This must be the IP address of the host where the Elastic Agent is installed (for example, 192.168.1.19).

If you need to customize the port or protocol, or to set up syslog from the CLI, use the commands below. Replace 192.168.1.19 with the IP address of the host where the Elastic Agent is installed.

config log syslogd setting

set status enable

set server "192.168.1.19"

set mode udp

set port 514

end

To establish the connection to the syslog server using a specific source IP address, use the CLI configuration below. Replace 192.168.1.19 with the IP address of the host where the Elastic Agent is installed, and 172.16.1.1 with the source IP address the FortiGate should use.

config log syslogd setting

set status enable

set server "192.168.1.19"

set source-ip "172.16.1.1"

set mode udp

set port 514

end

Assumptions

The procedures described in Section 3 assume that a Log Collector has already been set up.

Compatibility

This integration has been tested against FortiOS versions 6.x and 7.x up to 7.4.1. Newer versions are expected to work but have not been tested.

Note

- When using the TCP input, be careful with the configured TCP framing. According to the FortiGate reference, framing should be set to rfc6587 when the syslog mode is reliable.
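To make the framing note more concrete, here is a small Python sketch of RFC 6587 octet counting, one of the framing methods that RFC describes: each message is prefixed with its length in bytes and a space, so the receiver can split a TCP stream without relying on newlines. The function names are illustrative, and the sketch makes no claim about the exact bytes a particular FortiOS version emits.

```python
from typing import List


def frame_octet_counted(message: str) -> bytes:
    """Prefix a message with its byte length and a space (octet counting)."""
    payload = message.encode("utf-8")
    return str(len(payload)).encode("ascii") + b" " + payload


def split_octet_counted(stream: bytes) -> List[bytes]:
    """Split a buffer of complete octet-counted frames into messages."""
    messages = []
    pos = 0
    while pos < len(stream):
        space = stream.index(b" ", pos)      # end of the length field
        length = int(stream[pos:space])      # declared payload size
        start = space + 1
        messages.append(stream[start:start + length])
        pos = start + length
    return messages


if __name__ == "__main__":
    data = frame_octet_counted("first event") + frame_octet_counted("second\nevent")
    print(split_octet_counted(data))
```

Because the length prefix delimits each message, a payload that itself contains a newline still arrives as one event, which is why a listener set to plain newline-delimited framing can mis-split such a stream.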

Fortinet FortiGate Integration Procedures

Please provide the following information to CyTech:

Collect Fortinet FortiGate logs (input: tcp)

- Listen Address - The bind address to listen for TCP connections.

- Listen Port - The TCP port number to listen on.

Collect Fortinet FortiGate logs (input: udp)

- Listen Address - The bind address to listen for UDP connections.

- Listen Port - The UDP port number to listen on.
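Once a UDP listen address and port have been agreed, a quick way to check the path to the listener is to send a single test datagram from another machine. The Python sketch below does that; the sample line is a made-up message loosely shaped like a key=value FortiGate log, not real device output, and the target address and port in the usage line are the example values used earlier in this section.

```python
import socket

# Hypothetical sample line for connectivity testing only.
SAMPLE = (
    '<189>date=2024-01-01 time=00:00:00 devname="test-fgt" '
    'type="event" msg="connectivity test"'
)


def send_test_syslog(host: str, port: int, message: str) -> int:
    """Send one UDP datagram and return the number of bytes sent."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        return sock.sendto(message.encode("utf-8"), (host, port))


if __name__ == "__main__":
    # Example target: the Elastic Agent host and the default syslog port.
    print(send_test_syslog("192.168.1.19", 514, SAMPLE))
```

UDP gives no delivery confirmation, so a successful send only shows that the packet left the sender; confirm arrival on the collector side.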

If you need further assistance, kindly contact our support at info@cytechint.com for prompt assistance and guidance.

GCP Integrations

Introduction

This document shows information related to GCP Integration.

The Google Cloud integration collects and parses Google Cloud Audit Logs, VPC Flow Logs, Firewall Rules Logs and Cloud DNS Logs that have been exported from Cloud Logging to a Google Pub/Sub topic sink and collects Google Cloud metrics and metadata from Google Cloud Monitoring.

Requirements

To use this Google Cloud Platform (GCP) integration, the following needs to be set up:

- Service Account with a Role. (Section 3.1.1)

- Service Account Key to access data on your GCP project. (Section 3.1.2)

Service Accounts

First, please create a Service Account. A Service Account (SA) is a particular type of Google account intended to represent a non-human user who needs to access the GCP resources.

The Log Collector uses the SA (Service Account) to access data on Google Cloud Platform using the Google APIs.

Here is a reference from Google related to Service Accounts:

https://cloud.google.com/iam/docs/best-practices-for-securing-service-accounts

Service Account with a Role

You need to grant your Service Account (SA) access to Google Cloud Platform resources by assigning a role to the account. In order to assign minimal privileges, create a custom role that has only the privileges required by the Log Collector. Those privileges are below:

- compute.instances.list (required for GCP Compute instance metadata collection) (*2)

- monitoring.metricDescriptors.list

- monitoring.timeSeries.list

- pubsub.subscriptions.consume

- pubsub.subscriptions.create (*1)

- pubsub.subscriptions.get

- pubsub.topics.attachSubscription (*1)

(*1) Only required if the Agent is expected to create a new subscription. If you create the subscriptions yourself, you may omit these privileges.

(*2) Only required if the corresponding collection will be enabled.

After you have created the custom role, assign the role to your service account.
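As an illustration of the minimal-privilege role described above, the Python sketch below assembles a custom role definition from the listed permissions, leaving out the optional ones unless they are requested. The role title, description, and function name are hypothetical; the permission names are the ones listed in this section.

```python
import json

# Always required by the Log Collector, per the list above.
BASE_PERMISSIONS = [
    "monitoring.metricDescriptors.list",
    "monitoring.timeSeries.list",
    "pubsub.subscriptions.consume",
    "pubsub.subscriptions.get",
]
# Only needed when the Agent creates subscriptions itself.
SUBSCRIPTION_CREATION = [
    "pubsub.subscriptions.create",
    "pubsub.topics.attachSubscription",
]
# Only needed for GCP Compute instance metadata collection.
COMPUTE_METADATA = ["compute.instances.list"]


def build_role_definition(create_subscriptions: bool, compute_metadata: bool) -> dict:
    """Return a custom role definition with only the needed permissions."""
    permissions = list(BASE_PERMISSIONS)
    if create_subscriptions:
        permissions += SUBSCRIPTION_CREATION
    if compute_metadata:
        permissions += COMPUTE_METADATA
    return {
        "title": "Log Collector (custom)",  # hypothetical title
        "description": "Minimal permissions for the Log Collector",
        "stage": "GA",
        "includedPermissions": sorted(permissions),
    }


if __name__ == "__main__":
    print(json.dumps(build_role_definition(False, True), indent=2))
```

The output can be saved to a file and should be usable as the role definition file for gcloud iam roles create, which accepts a JSON or YAML file through its --file option; verify against your gcloud version before relying on it.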

Service Account Key

Next, now that the Service Account (SA) has access to Google Cloud Platform (GCP) resources as set up in Section 3.1.1, you need credentials to associate with it: a Service Account Key.

From the list of SA (Service Accounts):

- Click the one you just created to open the detailed view.

- From the Keys section, click "Add key" > "Create new key" and select JSON as the type.

- Download and store the generated private key securely (remember, the private key can't be recovered from GCP if lost).

GCP Integrations Procedures

- GCP Audit Logs

The audit dataset collects audit logs of administrative activities and accesses within your Google Cloud resources.

Procedures

The "Project Id" and either the "Credentials File" or "Credentials JSON" will need to be provided in the integration UI when adding the Google Cloud Platform integration.

Please provide the following information to CyTech:

- Project ID

The Project ID is the Google Cloud project ID where your resources exist.

- Credentials File vs JSON

Based on your preference, specify the information in either the Credentials File OR the Credentials JSON field.

OPTION 1: CREDENTIALS FILE

Save the JSON file with the private key in a secure location of the file system, and make sure that the Elastic Agent has at least read-only privileges to this file.

Specify the file path in the Elastic Agent integration UI in the "Credentials File" field. For example: /home/ubuntu/credentials.json.

OPTION 2: CREDENTIALS JSON

Specify the content of the JSON file you downloaded from Google Cloud Platform directly in the Credentials JSON field in the Elastic Agent integration.
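Whichever option is chosen, it may be worth checking the key before handing it over. The Python sketch below is one possible check, assuming the standard layout of a downloaded service account key (fields such as type, project_id, private_key, and client_email); the function names are illustrative. For Option 1 it also confirms that the file is readable by the user running the check, which should be the account the Elastic Agent runs as.

```python
import json
import os

REQUIRED_FIELDS = ("type", "project_id", "private_key", "client_email")


def check_credentials_json(text: str) -> str:
    """Validate key content (Option 2) and return its project_id."""
    key = json.loads(text)
    missing = [field for field in REQUIRED_FIELDS if field not in key]
    if missing:
        raise ValueError(f"missing fields: {', '.join(missing)}")
    if key["type"] != "service_account":
        raise ValueError(f"unexpected key type: {key['type']}")
    return key["project_id"]


def check_credentials_file(path: str) -> str:
    """Validate a key file (Option 1) and return its project_id."""
    if not os.access(path, os.R_OK):
        raise PermissionError(f"{path} is not readable by the current user")
    with open(path, encoding="utf-8") as handle:
        return check_credentials_json(handle.read())


if __name__ == "__main__":
    # Example path from the text above; adjust to your environment.
    print(check_credentials_file("/home/ubuntu/credentials.json"))
```

The returned project_id can be compared with the Project ID that will be supplied alongside the credentials, as a guard against mixing up keys from different projects.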

- GCP DNS Logs

The dns dataset collects queries that name servers resolve for your Virtual Private Cloud (VPC) networks, as well as queries from an external entity directly to a public zone.

Procedures

The "Project Id" and either the "Credentials File" or "Credentials JSON" will need to be provided in the integration UI when adding the Google Cloud Platform integration.

Please provide the following information to CyTech:

- Project ID